Hosting Multiple Websites on a Single Alibaba Cloud ECS Server

In this tutorial, we will learn how to run two or more websites on the same Alibaba Cloud Elastic Compute Service (ECS) instance using Docker Compose and a reverse proxy. At the end I’ll show two ways to deploy it: running everything directly on an existing host, or using Terraform to provision the instance and set it all up.

Why This Approach?

In most cases, when you get an Alibaba Cloud ECS instance, you plan to use it for a single website or application. But sooner or later you will want to host something else, like a photo gallery to share with the family or a new blog, and the obvious answer is to launch another ECS instance for it. That approach, although recommended for stability, is often overkill. You don’t really need a whole new instance to run a static site or a tiny blog. Instead, you can host them on the same machine and cut costs by sharing resources.

If you already know that your server has the capacity to serve more than one website, and you want to make the most of your ECS instance, there are several ways to serve different content from a single ECS instance:

- Subfolders (“example.com/web1”, “example.com/web2”, “example.com/web3”)
- Port-based Virtual Hosting (“example.com:80”, “example.com:8080”, “example.com:8181”)
- Subdomains (“web1.example.com”, “web2.example.com”, “web3.example.com”)
- Name-based Virtual Hosting (“web1.com”, “web2.com”, “web3.com”)

Name-based Virtual Hosting

The best (and most elegant) solution here is Name-based Virtual Hosting, as it lets each site keep its own domain name. Imagine for a second that both Microsoft and Google decide to host their websites on my server. The first step would be to update the DNS “A” records of both “microsoft.com” and “google.com” to point to the public IP of my ECS instance.

And here is where the question arises: if visitors of both websites are directed to the same IP, how does the server know which site to serve for each request? This is where HTTP headers come in. Header fields are part of the header section of every HTTP request and define the parameters of the HTTP transaction. Let’s look at the example below:

GET / HTTP/1.1
Host: microsoft.com
Connection: keep-alive
Cache-Control: no-cache
User-Agent: Chrome/66.0.3359.139
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8
Accept-Encoding: gzip, deflate, br
Accept-Language: en-AU

As you can see, the Host header (mandatory since HTTP/1.1) in this HTTP request asks for “microsoft.com”. With that information, my server knows which site to serve. In this case it is the root path (“/”, according to the GET line) of microsoft.com.

Luckily for us, no web browsers are left that speak HTTP/0.9, where a request consisted only of the GET line and carried no information about the host.
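To make the idea concrete, here is a minimal shell sketch of what a server does with the Host header: it reads the request, picks out the Host field, and maps it to a site. The function names host_of_request and docroot_for_host and the fallback folder /var/www/html/default are my own placeholders for illustration, and a real web server does far more than this.

```shell
# Print the value of the Host header from a raw HTTP request on stdin.
# Header names are case-insensitive and HTTP lines end in CR LF, so the
# carriage returns are stripped first. A port suffix (":8080") is dropped.
host_of_request() {
  tr -d '\r' | awk -F': *' 'tolower($1) == "host" { print $2; exit }'
}

# Map a domain name to the folder that holds its website.
# The fallback folder is a hypothetical default site.
docroot_for_host() {
  case "$1" in
    web1.com) echo /var/www/html/web1.com ;;
    web2.com) echo /var/www/html/web2.com ;;
    *)        echo /var/www/html/default ;;
  esac
}

# Two requests to the same IP can be told apart only by this header.
request=$(printf 'GET / HTTP/1.1\r\nHost: web1.com\r\nConnection: keep-alive\r\n\r\n')
host=$(printf '%s' "$request" | host_of_request)
echo "$host -> $(docroot_for_host "$host")"
# prints: web1.com -> /var/www/html/web1.com
```

Changing the Host line to web2.com in the sample request is enough to get the other folder back, which is all Name-based Virtual Hosting really is.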

Most commonly, both Port-based and Name-based Virtual Hosting are set up with config files on the host, stored in a folder named sites-available and enabled by linking them into sites-enabled. These config files state which domain name is used (for example, web1.com) and where the website is stored on disk (for example, /var/www/html/web1.com/).

However, for security reasons, we will not be using that approach in this tutorial. Instead, we are going to deploy Name-based Virtual Hosting using containerization and a reverse proxy to manage the requests. For me, this is both a step up in complexity and a way to meet DevOps best practices.
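As a rough illustration of that layout, the sketch below recreates it in a temporary scratch directory rather than in the real web server folder. The file name web1.com.conf and its two placeholder lines are assumptions made for this example; the actual directives depend on whether you run Apache or nginx.

```shell
# Build the conventional layout in a throwaway directory.
root=$(mktemp -d)
mkdir -p "$root/sites-available" "$root/sites-enabled"

# One config file per site: which domain it answers to and where its files live.
# (Placeholder content, not valid Apache or nginx syntax.)
printf 'domain: web1.com\nfolder: /var/www/html/web1.com/\n' \
  > "$root/sites-available/web1.com.conf"

# Enabling a site means linking its config into sites-enabled.
ln -s ../sites-available/web1.com.conf "$root/sites-enabled/web1.com.conf"

# Reading through the link shows the file stored in sites-available.
cat "$root/sites-enabled/web1.com.conf"
```

Disabling the site is then just a matter of removing the link, while the config itself stays in sites-available.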

Virtualization / Containerization

Nowadays, software such as Docker performs operating-system-level virtualization, also known as containerization, in which containers look like real computers from the point of view of the programs running inside them. The benefit is the isolation between services running in different containers: a website running in one container cannot see the files or processes (by default) of a website running in another container.

So, in this way, multiple websites can run in separate containers at the same time on one ECS instance and be completely unaware of each other. Each of them can even listen on port 80 inside its own container, exactly as it would when running directly on the host. The containers do not need to publish that port on the host; the reverse proxy reaches them over Docker’s internal bridge network.

Reverse Proxy

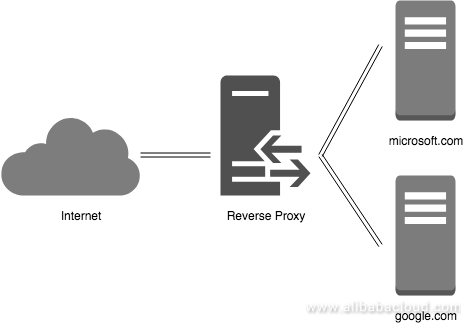

Containerization by itself still doesn’t solve the problem of routing each HTTP request to the right website. That’s why we need a reverse proxy: a service that can also run as a container, intercepts incoming requests, and sends them to the correct place, making it the central piece for handling Virtual Hosting.

The diagram above shows a reverse proxy taking requests from the Internet and forwarding them to servers in the internal network. Visitors making requests to the proxy may not be aware of it.

My favorite solution for this is neilpang/nginx-proxy, a fork by the developer “neilpang” of jwilder’s original project. This fork also includes “acme.sh” to automate obtaining SSL certificates. This makes it a really good reverse proxy for routing and securing your sites at the same time.

According to the official README, this nginx-proxy “sets up a container running nginx and docker-gen. This generates reverse proxy configs for nginx and reloads nginx when containers are started and stopped”. So basically it keeps track of all the containers running on the host and updates its routing rules in real time as containers start and stop.
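To get a feel for what gets generated, the following shell sketch prints a simplified nginx server block for each site. It is not the actual template shipped with nginx-proxy; the function name render_vhost and the container IPs 172.17.0.3 and 172.17.0.4 are made up for the example.

```shell
# Print a simplified nginx server block for one website.
# $1 = domain the site answers to, $2 = container IP on Docker's bridge network
render_vhost() {
  cat <<EOF
server {
    listen 80;
    server_name $1;
    location / {
        proxy_pass http://$2:80;
        proxy_set_header Host \$host;
    }
}
EOF
}

# One block per running website container (IPs are illustrative).
render_vhost web1.com 172.17.0.3
render_vhost web2.com 172.17.0.4
```

Each block tells nginx to match the incoming Host header against server_name and forward the request to port 80 of the matching container. The proxy regenerates this kind of config whenever a container starts or stops, so you never edit it by hand.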

Putting All the Parts Together

OK! So we know we need a Name-based Virtual Hosting solution running on Docker, with a reverse proxy and at least two websites behind it. As mentioned in the introduction, everything is going to be wrapped up with Docker Compose, a tool for defining and running multi-container applications, which is a perfect fit for our use case.

Each container in a docker-compose.yml config file is called a “service” and is defined under the services key in YAML format. The services in our file will be:

- proxy

- web1 (web1.com)

- web2 (web2.com)

For simplicity’s sake, I’m using the standard, unmodified official image of PHP 7.2 with Apache (php:7.2-apache) in both web services. “web1” and “web2” will therefore serve only Apache’s default response until you add your own content, which you can do by opening a shell in the container with docker exec -it web1 bash.

Running on an ECS

Directly on the host

If you already have an instance, follow the official documentation to install Docker, then create a file called “docker-compose.yml” with the contents shown in the following section and run it like a normal Docker Compose project.

docker-compose.yml

version: '3.6'
services:
  proxy:
    image: neilpang/nginx-proxy
    network_mode: bridge
    container_name: proxy
    restart: on-failure
    ports:
      - "80:80"
      - "443:443"
    environment:
      - ENABLE_IPV6=true
    volumes:
      - ./certs:/etc/nginx/certs
      # Read-only access to the Docker socket lets the proxy watch containers.
      - /var/run/docker.sock:/tmp/docker.sock:ro
  web1:
    image: php:7.2-apache
    network_mode: bridge
    container_name: web1
    restart: on-failure
    environment:
      # Domain name the proxy routes to this container.
      - VIRTUAL_HOST=web1.com
  web2:
    image: php:7.2-apache
    network_mode: bridge
    container_name: web2
    restart: on-failure
    environment:
      - VIRTUAL_HOST=web2.com
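Once the stack is up (docker-compose up -d in the folder containing the file), one way to check the routing before touching any DNS records is to send requests with a hand-written Host header. The helper below is a sketch that assumes curl is installed and that the proxy listens on port 80 of localhost; vhost_status and the domain unknown.example are names I made up for it.

```shell
# Print the HTTP status code the proxy returns for a given domain.
# $1 = address of the ECS instance (or localhost), $2 = domain for the Host header
vhost_status() {
  curl --silent --output /dev/null --max-time 5 \
       --write-out '%{http_code}\n' \
       --header "Host: $2" "http://$1/"
}

# Only probe when curl is available; an unreachable server is reported as 000.
if command -v curl >/dev/null 2>&1; then
  for domain in web1.com web2.com unknown.example; do
    printf '%s: ' "$domain"
    vhost_status localhost "$domain" || true
  done
fi
```

The two configured domains should be answered by their own containers, while a domain the proxy does not know about should get an error response from the proxy itself. To test from your own machine, replace localhost with the public IP of the instance.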

With Terraform

If you don’t have an ECS instance yet, I recommend creating it with Terraform, as Alibaba Cloud has excellent support for it. Check this article to learn how to get Terraform for Alicloud up and running on your local machine first.

If you followed the instructions in that article to get Terraform working, it is time to create main.tf and user-data.sh:

main.tf

variable "access_key" {
  type    = "string"
  default = "XXXXX"
}

variable "secret_key" {
  type    = "string"
  default = "XXXXX"
}

variable "region" {
  type    = "string"
  default = "ap-southeast-2"
}

variable "vswitch" {
  type    = "string"
  default = "XXX-XXXXX"
}

variable "sgroups" {
  type = "list"
  default = [
    "XX-XXXXX"
  ]
}

variable "name" {
  type    = "string"
  default = "multi-tenant"
}

variable "password" {
  type    = "string"
  default = "Test1234!"
}

provider "alicloud" {
  access_key = "${var.access_key}"
  secret_key = "${var.secret_key}"
  region     = "${var.region}"
}

data "alicloud_images" "search" {
  name_regex = "^ubuntu_16.*_64"
}

data "alicloud_instance_types" "default" {
  instance_type_family = "ecs.xn4"
  cpu_core_count       = 1
  memory_size          = 1
}

data "template_file" "user_data" {
  template = "${file("user-data.sh")}"
}

resource "alicloud_instance" "web" {
  instance_name              = "${var.name}"
  image_id                   = "${data.alicloud_images.search.images.0.image_id}"
  instance_type              = "${data.alicloud_instance_types.default.instance_types.0.id}"
  vswitch_id                 = "${var.vswitch}"
  security_groups            = "${var.sgroups}"
  internet_max_bandwidth_out = 100
  password                   = "${var.password}"
  user_data                  = "${data.template_file.user_data.template}"
}

output "ip" {
  value = "${alicloud_instance.web.public_ip}"
}

user-data.sh

#!/bin/bash -v

# Create docker-compose.yml
cat <<- 'EOF' > /opt/docker-compose.yml
version: '3.6'
services:
  proxy:
    image: neilpang/nginx-proxy
    network_mode: bridge
    container_name: proxy
    restart: on-failure
    ports:
      - "80:80"
      - "443:443"
    environment:
      - ENABLE_IPV6=true
    volumes:
      - ./certs:/etc/nginx/certs
      - /var/run/docker.sock:/tmp/docker.sock:ro
  web1:
    image: php:7.2-apache
    network_mode: bridge
    container_name: web1
    restart: on-failure
    environment:
      - VIRTUAL_HOST=web1.com
  web2:
    image: php:7.2-apache
    network_mode: bridge
    container_name: web2
    restart: on-failure
    environment:
      - VIRTUAL_HOST=web2.com
EOF

# Install Docker from the official Docker repository
apt-get update && apt-get install -y apt-transport-https ca-certificates curl software-properties-common
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -
add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
apt-get update && apt-get install -y docker-ce docker-compose

# Replace the packaged docker-compose with release 1.21.2 and make sure it is executable
curl -L https://github.com/docker/compose/releases/download/1.21.2/docker-compose-`uname -s`-`uname -m` -o /usr/bin/docker-compose
chmod +x /usr/bin/docker-compose

# Start the proxy and both websites
cd /opt && docker-compose up -d

Then, as usual, run terraform init, terraform plan and terraform apply to make it happen. Remember to wait about 4 to 5 minutes after you receive the confirmation from Terraform, as the user-data script only starts installing the packages once the instance has booted.

Conclusion

We learnt how to share the resources of one machine efficiently to run several services at the same time, which is going to save us some money and headaches in the beginning. The main thing to remember is to keep track of resource usage, as you will probably need to upgrade the ECS instance (by adding an extra CPU or increasing the RAM) if the sites get busier. This solution is great for tiny websites with not much traffic, and it teaches you many hosting concepts and their intricacies along the way. Don’t hesitate to reference this article in the forum if anything was unclear.

Original article: Hosting Multiple Websites on a Single Alibaba Cloud ECS Server.